Abstract

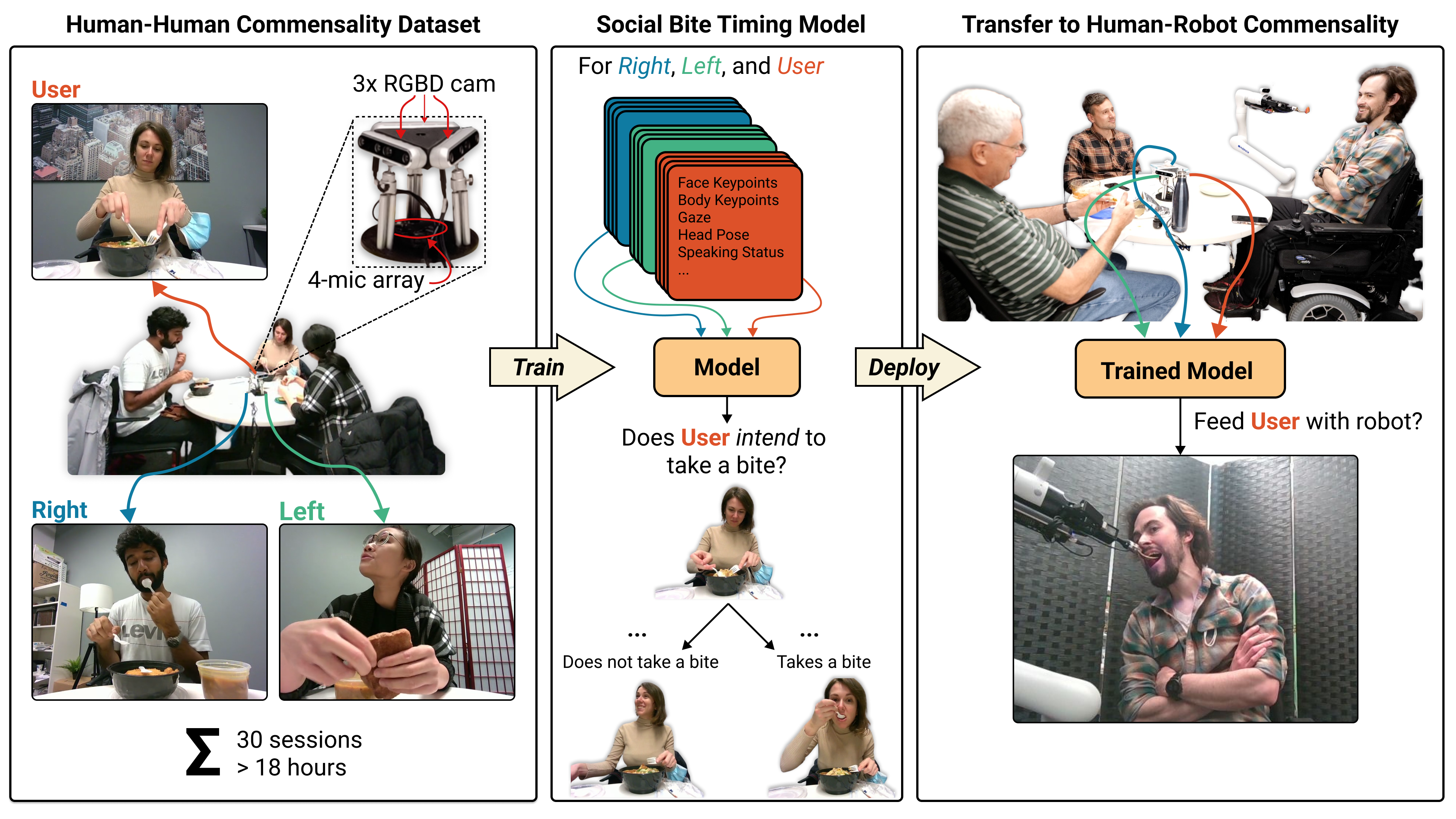

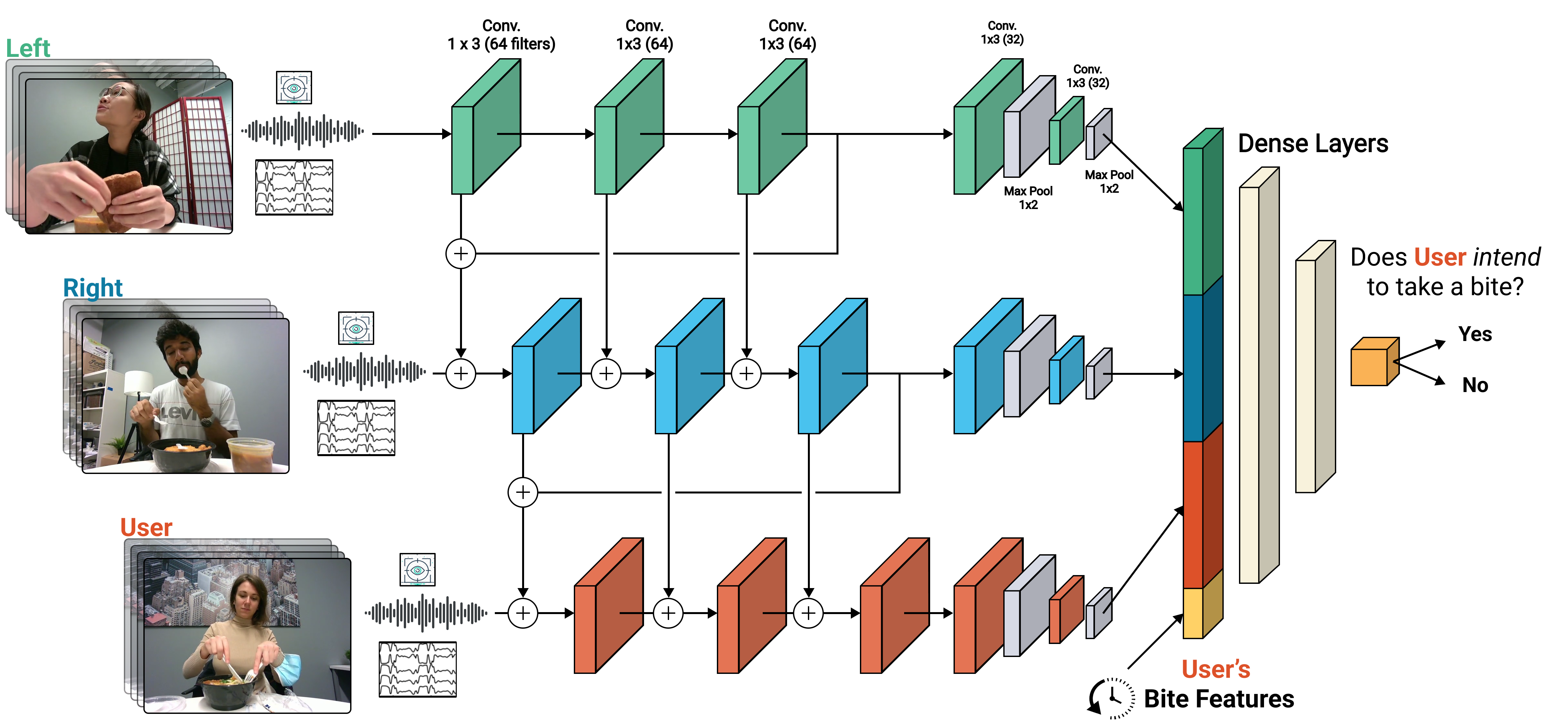

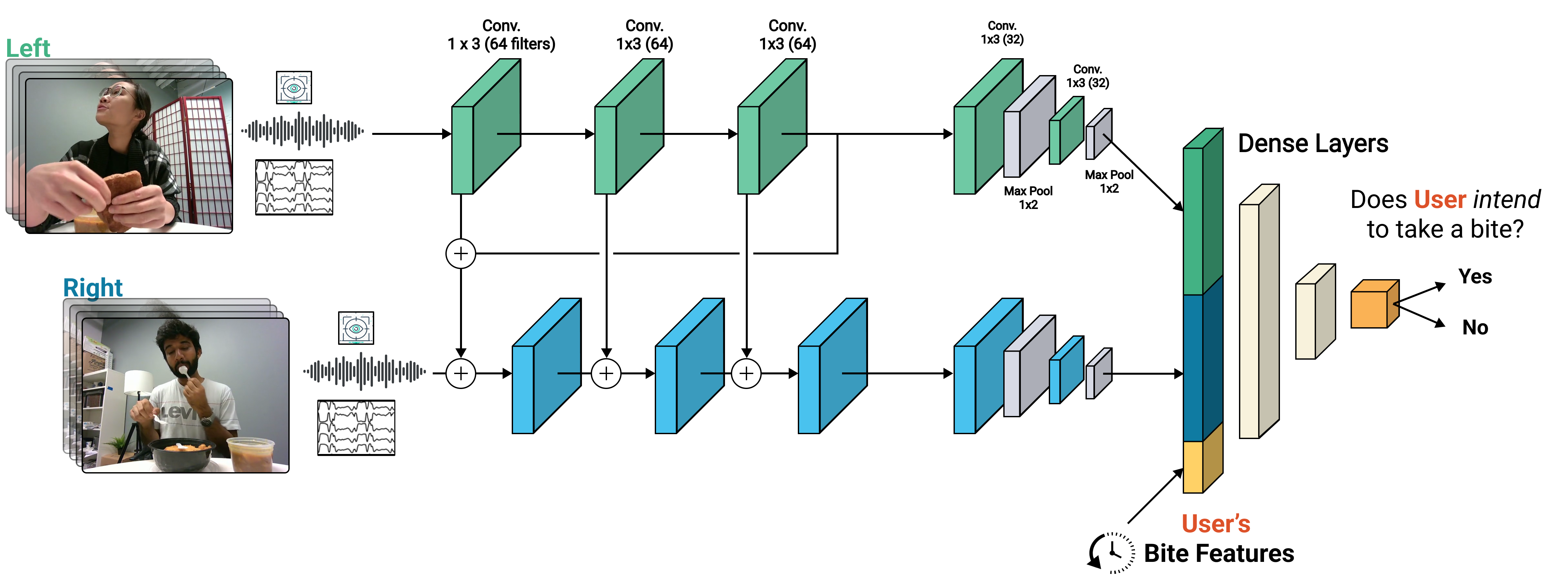

We develop data-driven models to predict when a robot should feed a user during social dining scenarios. Being able to eat independently with friends and family is considered one of the most memorable and important activities for people with mobility limitations. While existing robotic systems for feeding people with mobility limitations focus on solitary dining, commensality, the act of eating together, is often the practice of choice. Sharing meals with others introduces the problem of socially appropriate bite timing for a robot, i.e., when the robot should feed the user without disrupting the social dynamics of a shared meal. Our key insight is that bite timing strategies that account for the delicate balance of social cues can lead to seamless interactions during robot-assisted feeding in a social dining scenario. We approach this problem by collecting a Human-Human Commensality Dataset (HHCD) covering 30 groups of three people eating together. We use this dataset to analyze human-human commensality behaviors and to develop bite timing prediction models for social dining scenarios. We also transfer these models to human-robot commensality scenarios.