System Overview

Our inside-mouth bite transfer system leverages two novel components: robust multi-view mouth perception and physical interaction-aware control.

Robust Multi-View Mouth Perception

Training: We train our multi-view encoder in a self-supervised manner on a self-curated dataset of unoccluded images and masks of utensils holding various foods. We synthetically add occlusions and generate ground-truth supervision by running an existing single-view method on the unoccluded originals, where that method is effective.
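To make the training recipe concrete, here is a minimal Python sketch of how one occluded multi-view training sample could be built. It assumes grayscale images stored as nested lists, a binary utensil mask, and a `teacher` callable standing in for the existing single-view keypoint method; `paste_occluder`, `make_multiview_sample`, and the random placement policy are hypothetical illustrations, not our actual pipeline. The point it shows is that each target is computed on the clean view, while the encoder only ever sees the occluded one.

```python
import random
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]               # grayscale image as rows of pixel values
Mask = List[List[int]]                  # 1 where the utensil/food occluder is present
Keypoints = List[Tuple[float, float]]   # (x, y) face keypoints
Teacher = Callable[[Image], Keypoints]  # hypothetical stand-in for the single-view method


def paste_occluder(image: Image, occluder: Image, mask: Mask, top: int, left: int) -> Image:
    """Return a copy of `image` with occluder pixels pasted wherever mask == 1."""
    out = [row[:] for row in image]
    height, width = len(image), len(image[0])
    for i, mask_row in enumerate(mask):
        for j, m in enumerate(mask_row):
            y, x = top + i, left + j
            if m and 0 <= y < height and 0 <= x < width:  # clip at the image border
                out[y][x] = occluder[i][j]
    return out


def make_multiview_sample(
    views: Sequence[Image],
    occluder: Image,
    mask: Mask,
    teacher: Teacher,
    rng: random.Random,
) -> Tuple[List[Image], List[Keypoints]]:
    """Occlude each view at a random offset; label each view from its clean original."""
    inputs: List[Image] = []
    targets: List[Keypoints] = []
    for view in views:
        top = rng.randrange(len(view))      # random placement (hypothetical policy)
        left = rng.randrange(len(view[0]))
        inputs.append(paste_occluder(view, occluder, mask, top, left))
        targets.append(teacher(view))       # supervision comes from the UNOCCLUDED view
    return inputs, targets


# Tiny demo: paste an L-shaped 2x2 occluder into a blank 4x4 image.
clean = [[0.0] * 4 for _ in range(4)]
occluded = paste_occluder(clean, [[9.0, 9.0], [9.0, 9.0]], [[1, 0], [1, 1]], top=1, left=1)
print(occluded[1][1], occluded[1][2], occluded[2][2])  # 9.0 0.0 9.0
```

Because the teacher never sees the pasted utensil, the labels stay as reliable as the single-view method is on clean images, which is what lets the multi-view encoder learn to see "through" occlusions.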

Testing: Our mouth perception method accurately identifies facial keypoints, even under heavy occlusion, and outperforms baselines from the head-perception and robot-assisted feeding literature.

Real-time multi-view mouth perception enables our robot to adapt to voluntary head movement by the user, pause mid-transfer if the user is not ready, and evade involuntary head movements such as muscle spasms.
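The reactive behavior above can be pictured as a decision that is re-made on every perception update. The Python sketch below is a simplified illustration under stated assumptions, not our controller: the speed threshold `SPASM_SPEED`, the boolean `ready` cue, and the names `decide` and `next_waypoint` are all hypothetical, and a real system would reason about the full mouth pose rather than a single point.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Tuple

Vec3 = Tuple[float, float, float]

SPASM_SPEED = 0.5  # m/s; hypothetical threshold separating voluntary motion from spasms


class Action(Enum):
    TRACK = "track"  # keep moving toward the (possibly moving) mouth
    PAUSE = "pause"  # hold position until the user is ready
    EVADE = "evade"  # back away from sudden, involuntary head motion


@dataclass
class MouthObservation:
    t: float        # timestamp (s)
    position: Vec3  # estimated mouth center in the robot base frame (m)
    ready: bool     # hypothetical readiness cue, e.g. mouth open


def mouth_speed(prev: MouthObservation, curr: MouthObservation) -> float:
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("observations must be strictly increasing in time")
    return math.dist(prev.position, curr.position) / dt


def decide(prev: MouthObservation, curr: MouthObservation, spasm_speed: float = SPASM_SPEED) -> Action:
    """Re-evaluated on every perception update rather than once before the transfer."""
    if mouth_speed(prev, curr) > spasm_speed:
        return Action.EVADE  # safety check comes first
    if not curr.ready:
        return Action.PAUSE
    return Action.TRACK


def next_waypoint(ee: Vec3, mouth: Vec3, action: Action, step: float = 0.01) -> Vec3:
    """Move the end effector at most `step` meters toward (TRACK) or away from (EVADE) the mouth."""
    d = math.dist(ee, mouth)
    if action is Action.PAUSE or d == 0.0:
        return ee
    unit = [(m - e) / d for e, m in zip(ee, mouth)]
    delta = min(step, d) if action is Action.TRACK else -step
    return (ee[0] + delta * unit[0], ee[1] + delta * unit[1], ee[2] + delta * unit[2])
```

Checking for fast motion before readiness means the robot retreats from a spasm even if the user had already signaled readiness, whereas a single up-front perception (as in the perceive-once baseline) cannot react to either case.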

We validate the necessity of this functionality in an ablation study with 14 participants, in which our method significantly outperforms a baseline that perceives the mouth only once.

Physical Interaction-Aware Control

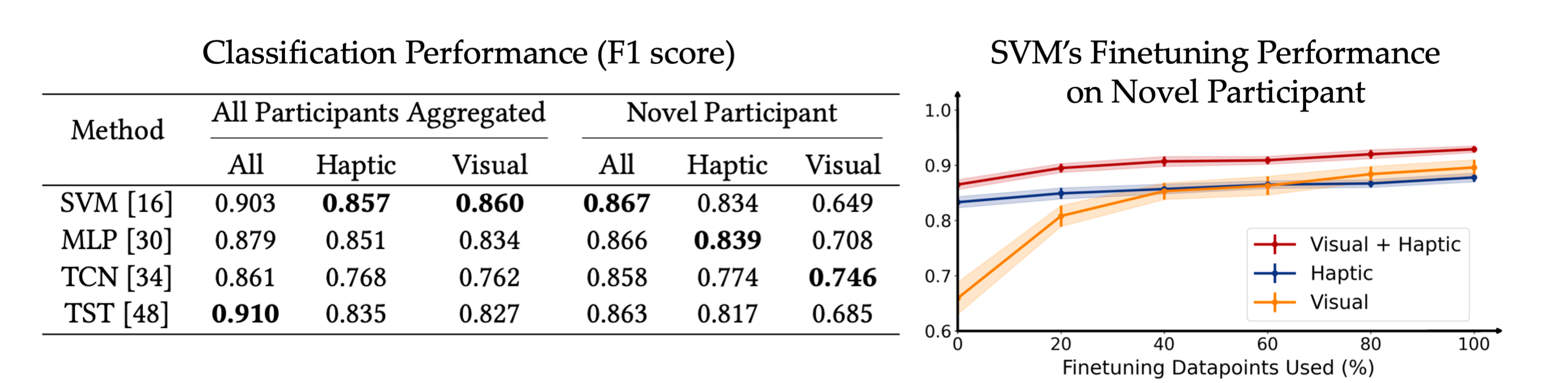

We take a data-driven approach to multimodal (visual and haptic) physical interaction classification and evaluate four time-series classifiers: Time-Series Transformers, Temporal Convolutional Networks, Multi-Layer Perceptrons, and SVMs.
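Whichever of the four models is used, the visual and haptic streams first have to be turned into fixed-length inputs. The sketch below shows one common way to do this (early fusion by concatenation, then sliding windows), assuming both streams are already resampled to a shared clock; the function names, the last-step labeling rule, and class strings such as `"tongue_guidance"` are hypothetical and not taken from our pipeline.

```python
from typing import List, Sequence

Frame = List[float]  # feature vector for one time step


def fuse(visual: Sequence[Frame], haptic: Sequence[Frame]) -> List[Frame]:
    """Early fusion: concatenate time-aligned visual and haptic features per step."""
    if len(visual) != len(haptic):
        raise ValueError("visual and haptic streams must be time-aligned")
    return [list(v) + list(h) for v, h in zip(visual, haptic)]


def sliding_windows(seq: Sequence[Frame], window: int, stride: int = 1) -> List[List[Frame]]:
    """Cut a (T, D) sequence into overlapping (window, D) chunks."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [list(seq[s:s + window]) for s in range(0, len(seq) - window + 1, stride)]


def window_labels(labels: Sequence[str], window: int, stride: int = 1) -> List[str]:
    """Label each window with its last step's class, so prediction stays causal."""
    return [labels[s + window - 1] for s in range(0, len(labels) - window + 1, stride)]


def flatten(window: Sequence[Frame]) -> List[float]:
    """(window, D) -> window*D vector for MLP/SVM inputs; TCNs and Transformers keep (window, D)."""
    return [x for frame in window for x in frame]


visual = [[float(t)] for t in range(5)]            # e.g. a 1-D visual feature per step
haptic = [[10.0 + t, 20.0 + t] for t in range(5)]  # e.g. 2-D force features per step
labels = ["no_contact", "no_contact", "tongue_guidance", "tongue_guidance", "bite"]
windows = sliding_windows(fuse(visual, haptic), window=3)
print(len(windows), window_labels(labels, window=3))
# 3 ['tongue_guidance', 'tongue_guidance', 'bite']
```

The same windows feed all four model families; only the final step differs, since MLPs and SVMs consume the flattened vector while sequence models consume the window as a sequence.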

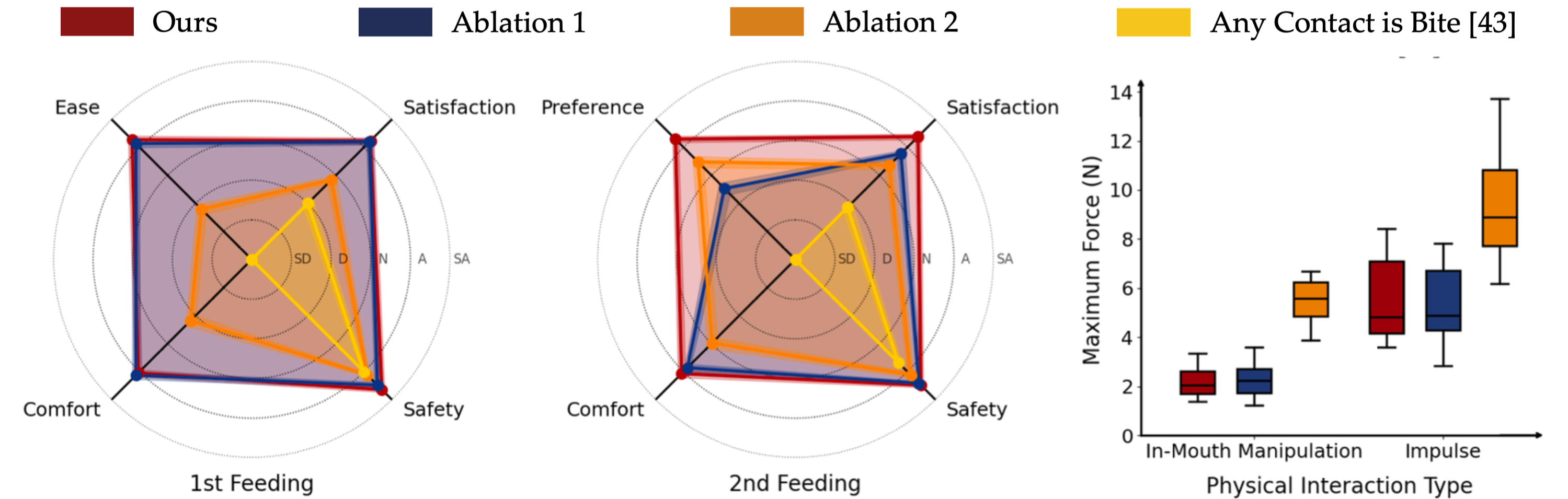

This physical interaction-aware controller lets us detect tongue guidance from a new care recipient, comply closely with it, and remember the resulting bite location (Feeding 1). The next time we feed them, the robot can move autonomously to that bite location while remaining highly compliant to any impulsive motions that occur (Feeding 2).
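One way to picture the two feedings is a controller whose stiffness depends on the classified interaction and which stores the bite location relative to the mouth. The Python sketch below assumes a Cartesian impedance controller, a translation-only mouth frame, and the stiffness values in `STIFFNESS`; all of these, along with the class names and `BiteMemory`, are hypothetical illustrations rather than our implementation or tuned gains.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical translational stiffness (N/m) per interaction class; lower = more compliant.
STIFFNESS: Dict[str, float] = {
    "no_contact": 600.0,
    "bite": 200.0,
    "tongue_guidance": 50.0,
    "impulsive": 20.0,
}


def stiffness_for(label: str) -> float:
    """Unknown labels fall back to the most compliant setting (a conservative choice)."""
    return STIFFNESS.get(label, min(STIFFNESS.values()))


def spring_force(k: float, x_des: Vec3, x: Vec3) -> Vec3:
    """Spring term of a Cartesian impedance law, F = K (x_des - x); damping omitted."""
    return (k * (x_des[0] - x[0]), k * (x_des[1] - x[1]), k * (x_des[2] - x[2]))


@dataclass
class BiteMemory:
    """Stores the bite location relative to the mouth (translation only, for simplicity)."""
    offset: Optional[Vec3] = None

    def remember(self, tool_tip: Vec3, mouth: Vec3) -> None:
        # Feeding 1: record where the bite happened after tongue guidance.
        self.offset = (tool_tip[0] - mouth[0], tool_tip[1] - mouth[1], tool_tip[2] - mouth[2])

    def target(self, mouth: Vec3) -> Optional[Vec3]:
        # Feeding 2: re-express the remembered location at the mouth's current position.
        if self.offset is None:
            return None
        return (mouth[0] + self.offset[0], mouth[1] + self.offset[1], mouth[2] + self.offset[2])
```

Storing the offset relative to the mouth, rather than in the robot's base frame, keeps the remembered bite location valid even if the care recipient's head has moved between feedings.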

We validate this functionality in an ablation study with 14 participants, in which our method significantly outperforms both a baseline that treats any contact as a bite and ablations of our method across several metrics, including satisfaction, safety, comfort, and maximum applied force.

Full System Evaluation with Care Recipients

Users perceive our system as safe and comfortable and view the technology favorably.